How to use different compression algorithms/tools within Zmanda.

Table of Contents

1. How to enable compression

2. About compression

3. Recommended algorithms

4. Scenario-based recommendations

5. Client-side vs server-side compression

How to enable compression in Zmanda

1. Log in to the Zmanda Management Console (ZMC).

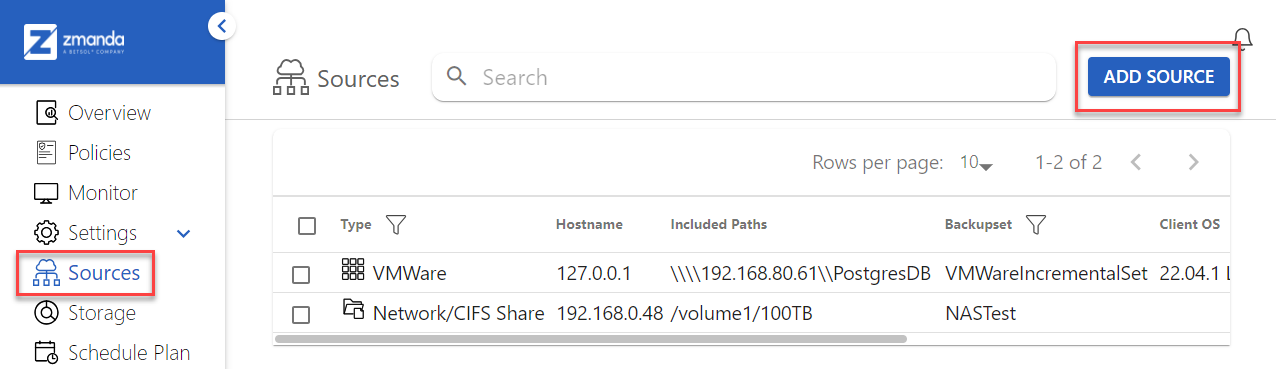

2. Go to Sources > ADD SOURCE and choose the type of source you would like to create.

3. Under Compression Strategy, choose one of the predefined options, or choose a custom strategy for either the server or the client.

Note: Client-side compression is only available for sources that have Zmanda Agents installed. For example, VMware sources do not support client-side compression. Additionally, custom client-side compression is only available for Linux-based sources.

4. For custom client-side compression, you must install the desired compression tool on the Linux-based client machine. For example, to use zstd, run the command for your distribution:

# For Debian-based systems

apt install zstd

# For RPM-based systems

yum install zstd

5. Enter the tool's install path in the Custom Client Compression field.
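Before filling in the Custom Client Compression field, you can look up where the tool was installed. The following is a minimal sketch: `find_compressor` is a hypothetical helper name, and it assumes the field expects the absolute path of the compressor binary (for example `/usr/bin/zstd`).

```shell
#!/bin/sh
# Print the absolute path of an installed compression tool.
# Assumption: the Custom Client Compression field expects the full path
# to the compressor binary, for example /usr/bin/zstd.
find_compressor() {
  tool="$1"
  if ! path=$(command -v "$tool"); then
    echo "$tool is not installed or not on PATH" >&2
    return 1
  fi
  echo "$path"
}

# Example: find_compressor zstd
```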

6. That's it! To configure custom server-side compression, follow the same steps on the Backup Server.

About Compression

Compression algorithms operate on the data itself, regardless of the file system storing the data.

They work by analyzing the data and finding patterns or redundancies to represent the information more efficiently. This process is agnostic to the file system, as it focuses on the content of the data rather than the underlying storage system.

The performance of compression operations can be influenced by factors such as the speed of the storage medium, overall system performance (CPU, memory), and the size and complexity of the data being compressed. These factors affect how quickly data is read from or written to the file system, which indirectly affects the compression process.

When choosing a compression algorithm, consider its CPU overhead, the number of CPU threads available, and the compression ratio you need. Together, these factors determine how much data is transmitted over the network and how fast compression and decompression run.
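To see how many CPU threads a machine can offer a multi-threaded compressor, a quick check such as the following can help. This is a sketch: `cpu_threads` is a hypothetical helper that relies on `nproc` (GNU coreutils), with `getconf _NPROCESSORS_ONLN` as a widely supported fallback.

```shell
#!/bin/sh
# Report how many CPU threads are available, to judge whether a
# multi-threaded compressor (such as pigz, or zstd with -T0) is worth using.
cpu_threads() {
  # nproc prints the processing units available to this process;
  # getconf is used when nproc is not installed.
  nproc 2>/dev/null || getconf _NPROCESSORS_ONLN
}

echo "Available CPU threads: $(cpu_threads)"
```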

Recommended Algorithms

Zstandard (Zstd): Zstd is a newer compression algorithm that offers a good balance between compression ratio and performance. It provides fast compression and decompression speeds and is often favored for its efficiency.

# For Debian-based systems

apt install zstd

# For RPM-based systems

yum install zstd
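Once zstd is installed, a quick round trip confirms that it works. This is a sketch with hypothetical helper and file names; `-3` is zstd's default level, and `-T0` asks zstd to use as many threads as it detects cores.

```shell
#!/bin/sh
# Compress a file with zstd at the default level using all detected cores.
zstd_pack() {
  zstd -q -3 -T0 -c "$1" > "$2"
}

# Decompress a .zst file back to its original contents.
zstd_unpack() {
  zstd -q -d -c "$1" > "$2"
}

# Example: zstd_pack data.tar data.tar.zst && zstd_unpack data.tar.zst restored.tar
```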

LZ4: This algorithm is known for its high compression and decompression speeds with low CPU overhead. It provides good performance, especially for real-time workloads or systems with limited computational resources.

# For Debian-based systems

apt install lz4

# For RPM-based systems

yum install lz4
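A comparable round trip with lz4, again as a sketch with hypothetical helper names. `-1` is lz4's fast default; higher levels (up to `-12`) are slower but compress more.

```shell
#!/bin/sh
# Compress a file with lz4 at its fastest (default) level.
lz4_pack() {
  lz4 -q -1 -c "$1" > "$2"
}

# Decompress a .lz4 file back to its original contents.
lz4_unpack() {
  lz4 -q -d -c "$1" > "$2"
}

# Example: lz4_pack data.tar data.tar.lz4 && lz4_unpack data.tar.lz4 restored.tar
```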

Gzip: Gzip offers a higher compression ratio compared to LZ4 but may have higher CPU overhead and slower performance. It provides a good balance between compression efficiency and speed.

# For Debian-based systems

apt install gzip

# For RPM-based systems

yum install gzip
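gzip's levels run from `-1` (fastest) to `-9` (smallest output), with `-6` as the default. This sketch (the helper name is hypothetical) prints the compressed size in bytes at `-1` and at `-9` for a sample file, so you can see the trade-off on your own data.

```shell
#!/bin/sh
# Print the gzip-compressed size of a file at level 1 and at level 9.
gzip_level_sizes() {
  # $(( )) strips any padding that some wc implementations add.
  fast=$(( $(gzip -1 -c "$1" | wc -c) ))
  best=$(( $(gzip -9 -c "$1" | wc -c) ))
  echo "$fast $best"
}

# Example: gzip_level_sizes data.tar
```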

LZJB: LZJB is a lightweight compression algorithm that provides modest compression ratios with very low CPU overhead. It is suitable for scenarios where minimizing CPU usage is a priority. LZJB is the original built-in compression algorithm of ZFS and is generally used through ZFS rather than as a standalone command-line tool.

Pigz: pigz, which stands for parallel implementation of gzip, is a fully functional replacement for gzip that exploits multiple processors and multiple cores to the hilt when compressing data.

# For Debian-based systems

apt install pigz

# For RPM-based systems

yum install pigz
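Because pigz writes standard gzip output, files it creates can be restored with plain `gzip -d`. A sketch with a hypothetical helper name; `-p` sets the number of threads, which this helper defaults to the `nproc` count.

```shell
#!/bin/sh
# Compress a file with pigz using the given number of threads
# (third argument), or all available threads if none is given.
pigz_pack() {
  threads=${3:-$(nproc 2>/dev/null || echo 1)}
  pigz -p "$threads" -c "$1" > "$2"
}

# Example: pigz_pack data.tar data.tar.gz 4 && gzip -d -c data.tar.gz > restored.tar
```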

Bzip2: bzip2 favors compression ratio over speed. It achieves high compression ratios at the expense of slower compression and decompression. Although it needs more computational resources than faster algorithms, its memory and CPU usage are generally moderate. It is commonly used when a good compression ratio is desired and the slower speed is acceptable for the use case.

# For Debian-based systems

apt install bzip2

# For RPM-based systems

yum install bzip2
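bzip2 can also verify an archive's integrity without writing any output, using `-t`. A sketch with hypothetical helper names; `-9` (900 kB blocks) is already bzip2's default and is written out only for clarity.

```shell
#!/bin/sh
# Compress a file with bzip2 using 900 kB blocks (the default).
bzip2_pack() {
  bzip2 -9 -c "$1" > "$2"
}

# Test a .bz2 file's integrity; returns non-zero if it is damaged.
bzip2_check() {
  bzip2 -t "$1"
}

# Example: bzip2_pack data.tar data.tar.bz2 && bzip2_check data.tar.bz2
```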

LZMA (XZ): LZMA compression excels at achieving high compression ratios, at the cost of slower compression and decompression and higher memory usage. It is commonly used when file size reduction is a priority and the performance trade-off is acceptable for the use case.

# For Debian-based systems

apt install xz-utils

# For RPM-based systems

yum install xz
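A similar round trip with xz, as a sketch with hypothetical helper names. `-6` is the default preset, and `-T0` enables multi-threaded compression on xz 5.2 or newer.

```shell
#!/bin/sh
# Compress a file with xz at the default preset, using all cores.
xz_pack() {
  xz -q -6 -T0 -c "$1" > "$2"
}

# Decompress a .xz file back to its original contents.
xz_unpack() {
  xz -q -d -c "$1" > "$2"
}

# Example: xz_pack data.tar data.tar.xz && xz_unpack data.tar.xz restored.tar
```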

The table below gives an apples-to-apples comparison of various compression algorithms in terms of Speed, Compression ratio, CPU Usage, and Memory Usage. Higher numbers are better.

Comparison of Compression Algorithms using a Scale of 1 to 10

| Algorithm | Speed | Compression Ratio | CPU Usage | Memory Usage |
|---|---|---|---|---|
| zstd | 8 | 9 | 7 | 6 |
| lz4 | 9 | 6 | 8 | 3 |
| pigz | 8 | 7 | 8 | 5 |
| gzip | 5 | 4 | 5 | 2 |
| bzip2 | 4 | 8 | 6 | 4 |
| lzma (XZ) | 3 | 9 | 9 | 7 |

Note: Values can vary based on multiple factors, such as the type of data and the environment.

The values for each algorithm are given in reference to the values of the other algorithms in the table. In other words, the values do not give any information as to what the speed, compression ratio, CPU usage, or memory usage actually are. Rather, they should be used to compare one algorithm to another.

Scenario-Based Recommendations

Zmanda recommends Zstd for most cases since it is one of the most balanced algorithms in terms of speed, compression ratio, and hardware utilization.

Large Datasets (5TB+)

Large datasets typically favor compression algorithms with higher compression ratios, parallel processing support, and streaming-friendly designs. We recommend Zstd or Gzip (or pigz, its multi-threaded implementation) when the system performing the compression has multiple CPUs and compression ratio matters more than CPU usage.
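On a multi-CPU system, a streaming pipeline can pick a parallel compressor when one is installed. This is a sketch only: `parallel_compress` is a hypothetical helper, and its preference order (zstd, then pigz, then single-threaded gzip) is an illustrative assumption that mirrors the recommendation above.

```shell
#!/bin/sh
# Compress stdin to stdout with the first multi-threaded tool found,
# falling back to single-threaded gzip. The chosen tool is reported on stderr.
parallel_compress() {
  if command -v zstd >/dev/null 2>&1; then
    echo "using zstd" >&2
    zstd -q -T0 -c
  elif command -v pigz >/dev/null 2>&1; then
    echo "using pigz" >&2
    pigz -c   # pigz uses all online processors by default
  else
    echo "using gzip (single-threaded)" >&2
    gzip -c
  fi
}

# Example: tar -cf - /data | parallel_compress > data.tar.compressed
```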

Small Datasets (<5TB)

Small datasets typically favor lightweight, fast algorithms with minimal overhead.

In these cases, we recommend LZ4, LZJB, or Zstd.

ZFS Filesystems

ZFS is a robust file system with built-in support for data compression. Zstd is one of the best compression algorithms for use with ZFS; other supported options include LZ4, Gzip, and LZJB.
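On a ZFS system, compression is a per-dataset property. A sketch, assuming OpenZFS and root privileges (zstd requires OpenZFS 2.0 or newer); the helper name and the dataset `tank/backups` are hypothetical. Only data written after the property is set gets compressed.

```shell
#!/bin/sh
# Enable compression on a ZFS dataset and report the achieved ratio.
zfs_compression() {
  dataset="$1" algo="$2"
  # Limit this sketch to the algorithms discussed in this article.
  case "$algo" in
    zstd|lz4|gzip|lzjb) ;;
    *) echo "unsupported algorithm for this sketch: $algo" >&2; return 1 ;;
  esac
  zfs set compression="$algo" "$dataset" &&
    zfs get compression,compressratio "$dataset"
}

# Example (hypothetical dataset): zfs_compression tank/backups zstd
```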

NFS and SMB File Shares

For file shares such as NFS and SMB, the focus is generally on optimizing network bandwidth and reducing data transfer time. Client-side compression with algorithms that have high compression ratios helps in these cases by reducing the amount of data transmitted over the network. We recommend Zstd, Gzip, or pigz in such cases.
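The bandwidth saving is simple arithmetic: the data sent is the original size divided by the compression ratio. A rough sketch with a hypothetical helper, assuming decimal units (1 GB = 10^9 bytes), a fully used link, no protocol overhead, and a compressor fast enough not to be the bottleneck.

```shell
#!/bin/sh
# Estimate transfer time in hours for size_gb of data compressed at
# ratio:1 and sent over a link of link_mbps megabits per second.
transfer_hours() {
  size_gb="$1" ratio="$2" link_mbps="$3"
  awk -v s="$size_gb" -v r="$ratio" -v l="$link_mbps" 'BEGIN {
    bits = s * 1e9 * 8 / r   # bits actually sent after compression
    printf "%.2f\n", bits / (l * 1e6) / 3600
  }'
}

# 1000 GB at a 2:1 ratio over a 1000 Mbit/s link
transfer_hours 1000 2 1000   # prints 1.11
```

Without compression (ratio 1), the same transfer takes twice as long, about 2.22 hours under these assumptions.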

SSD vs HDD

When it comes to choosing a compression algorithm for solid-state drives (SSDs) versus hard disk drives (HDDs), the primary consideration is often the trade-off between compression ratio and CPU utilization. The performance characteristics of compression algorithms may affect SSDs and HDDs differently due to their inherent hardware differences. SSDs are known for their high read and write speeds, but they have limited endurance for write operations compared to HDDs. In general, SSDs benefit from compression algorithms that prioritize low CPU utilization, as this reduces the computational overhead and helps maintain high throughput.

LZ4 and LZJB are commonly recommended for SSDs due to their low CPU utilization and fast compression/decompression speeds. These algorithms can leverage the SSD's fast I/O capabilities without significantly impacting performance.

In the case of HDDs, the primary consideration is often maximizing the compression ratio to reduce storage requirements. HDDs have slower sequential and random-access speeds compared to SSDs, so the focus is on optimizing storage efficiency rather than minimizing CPU utilization. Algorithms such as Zstd or Gzip, which offer higher compression ratios but require more CPU resources, may be more suitable for HDDs.
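On Linux, you can check whether a disk is rotational through sysfs before choosing between these options. A sketch with a hypothetical helper name; note that virtual disks and some RAID controllers may report this flag inaccurately.

```shell
#!/bin/sh
# Map the sysfs "rotational" flag to a disk type:
# 1 = rotational (HDD), 0 = non-rotational (usually SSD or NVMe).
classify_rotational() {
  case "$1" in
    0) echo SSD ;;
    1) echo HDD ;;
    *) echo unknown ;;
  esac
}

# List each block device with its classification.
for flag in /sys/block/*/queue/rotational; do
  [ -r "$flag" ] || continue
  dev=${flag#/sys/block/}
  dev=${dev%%/*}
  echo "$dev: $(classify_rotational "$(cat "$flag")")"
done
```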

Recommendation Conclusion

It can be beneficial to benchmark different compression algorithms with your specific datasets and environments to determine the most suitable choice for your use case.
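A simple benchmark can be run with a shell loop. This is a sketch, not an official Zmanda tool: `benchmark` is a hypothetical helper, each tool runs at its default level, tools that are not installed are skipped, and timing has one-second resolution, so use a sample large enough to take several seconds.

```shell
#!/bin/sh
# Compare compressed size, ratio, and elapsed seconds for several tools
# on a sample of your own data.
benchmark() {
  sample="$1"; shift
  orig=$(( $(wc -c < "$sample") ))
  printf '%-6s %12s %7s %5s\n' tool bytes ratio secs
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || continue
    start=$(date +%s)
    size=$(( $("$tool" -c < "$sample" 2>/dev/null | wc -c) ))
    end=$(date +%s)
    ratio=$(awk -v o="$orig" -v c="$size" 'BEGIN { printf "%.2f", (c > 0 ? o / c : 0) }')
    printf '%-6s %12s %7s %5s\n' "$tool" "$size" "$ratio" "$((end - start))"
  done
}

# Example (hypothetical sample path):
# benchmark /var/tmp/sample.tar zstd lz4 pigz gzip bzip2 xz
```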

In general, we recommend Zstd; choose one of the other options only if you have specific requirements or constraints that call for it.

Client-Side vs Server-Side Compression

You may choose either client-side or server-side compression, but not both. Data that has already been compressed on the client usually cannot be compressed much further, so only one of the two options can be selected.
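You can observe this with gzip: compress a file once, then compress the already-compressed output again, and compare sizes. A sketch with a hypothetical helper name; for most real-world data the second size is about the same as, or slightly larger than, the first, although highly repetitive inputs (such as long runs of zeros) can be exceptions.

```shell
#!/bin/sh
# Print the size after one gzip pass and after a second pass over
# the already-compressed output.
double_compress_sizes() {
  once=$(( $(gzip -c < "$1" | wc -c) ))
  twice=$(( $(gzip -c < "$1" | gzip -c | wc -c) ))
  echo "$once $twice"
}

# Example: double_compress_sizes data.tar
```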

Enabling client-side compression has the benefit of reducing the volume of data being transferred over the network at the cost of higher overheads on the client system.

If reducing overhead on client systems is a higher priority than conserving network bandwidth, choose server-side compression instead.